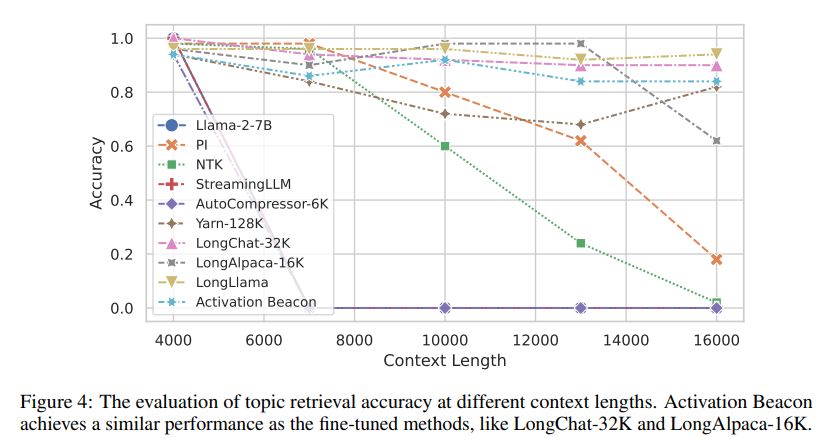

Activation Beacon is a plug-and-play module for large language models that lets them process longer contexts within a limited context window while preserving their original capabilities. It achieves competitive memory and time efficiency and can be trained efficiently on short-sequence data. Long sequences are processed in a streaming fashion with sliding windows, which makes it efficient at both inference and training time. Because the condensing ratios are sampled diversely during training, it learns to support a wide range of context lengths from short-sequence training data. Experiments show that Activation Beacon is an effective, efficient, compatible, and low-cost (in training) method for extending the context length of LLMs.
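To make the sliding-window and condensing-ratio idea concrete, here is a minimal bookkeeping sketch in plain Python. It is not the project's implementation: the function names, the window size, and the candidate ratios are all hypothetical and chosen for illustration. It assumes each window of raw tokens is condensed by an integer ratio into a smaller number of retained activations, and it only counts how many positions the model would have to attend to at each step.

```python
import random


def stream_steps(total_tokens, window, ratio):
    """Return (raw_tokens, condensed_memory) for each sliding-window step.

    Assumption for illustration: after a window is processed, its raw
    activations are replaced by ceil(raw_tokens / ratio) condensed ones.
    """
    memory = 0
    steps = []
    for start in range(0, total_tokens, window):
        chunk = min(window, total_tokens - start)
        steps.append((chunk, memory))
        memory += -(-chunk // ratio)  # ceiling division
    return steps


def peak_positions(total_tokens, window, ratio):
    """Largest number of positions (raw + condensed) seen in any step."""
    return max(chunk + mem for chunk, mem in stream_steps(total_tokens, window, ratio))


def sample_ratio(candidates=(2, 4, 8, 16, 32), rng=random):
    """Pick a condensing ratio at random, as one might per training step.

    The candidate values are illustrative, not taken from the source.
    """
    return rng.choice(candidates)


if __name__ == "__main__":
    for ratio in (2, 8, 32):
        print(ratio, peak_positions(16384, 1024, ratio))
```

Under these assumptions, a larger ratio lowers the peak number of attended positions for the same input length, which is why a model trained across several ratios could cover a range of context lengths with a fixed window.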